The secret to a successful survey lies in the preparation. Amelia Earhart’s words on preparation resonate deeply when it comes to survey ventures. To collect high-quality survey data that informs decision-making, you need to create a survey administration guide.

This guide is the cornerstone of any survey project. It paves the way for effective and efficient data collection. If multiple personnel are administering the survey, it’s crucial to synchronize all essential tasks through group training and written procedures.

Key elements of a survey administration guide include:

Timeline. Define the timeframe for survey administration as well as essential pre- and post-survey activities. An example of tasks to include in the timeline for an online survey is as follows:

Communications Plan. It’s important to communicate before, during, and after the survey administration. Here’s an example of messaging to send to your survey participants:

Before Administration. We are dedicated to understanding what is going well and where improvements may be needed for our program. To do this, we need your feedback. Please look for the survey link on (date) and complete it by (date). Your feedback matters because it helps us ensure we are providing the highest quality services. Thank you!

Beginning the Survey. We are looking forward to your feedback about our program. Please go here (insert link) to complete a survey. Your feedback will help us with program planning as well as to understand program impact. Please submit your responses by (date). We will share a summary of the survey results in (month). Thank you!

After Administration. Thank you for participating in our survey! We received a total of (number) completed surveys. We truly appreciate your time and insights. We are in the process of analyzing the data and anticipate sharing results by (month). Thank you!

Survey Materials. If administering a paper survey, include a materials checklist.

Script. If administering a paper survey, develop a script that all survey administrators will use. Below is a sample script:

Our program offers services to support you. We would like your feedback to help us understand how we’re doing. Please fill out the survey as honestly as possible. There are no right or wrong answers; we only want to know what you think about our services. We will not ask you to put your name on the survey, so no one will know your answers. If you do not understand a question or need some other kind of help, just ask me. When you are finished, please put your completed survey in this box. Thank you!

Let’s look at an example. The Northwest Housing Alternatives (NHA) Resident Survey was administered in person by the six Resident Service Coordinators (RSCs) at 31 properties. How did we make sure the survey was administered consistently across all properties?

A group training with written instructions on how to administer surveys ensured all staff collected surveys consistently and successfully. We agreed on a two-week timeframe in which all RSCs administered the surveys in person at each location. The written instructions included the same script each RSC would use, whether they were administering the survey in a group setting, such as a pizza party, or going door to door to administer it individually. This process ensured that all residents received the same instructions.

Creating a survey administration guide is not a difficult or time-consuming task, but it requires careful planning and attention to detail. You can use existing templates or examples from similar surveys as a starting point, but you should always customize your guidelines to fit your specific survey context and audience. By creating survey administration guidelines before administering the survey, you can ensure that your survey is well executed and well received.

Want more? An entire chapter in my book, Nonprofit Program Evaluation Made Simple, is dedicated to survey design. Get your copy today.

One of the best ways to improve your program is to create a feedback loop with your participants. A feedback loop is a way of gathering, processing, and acting on the feedback you get from your participants. This helps you to better understand their wants, needs, and difficulties, and adjust your program accordingly. It also shows them that you appreciate their input and care about their experience.

However, creating a feedback loop is not just about sending a survey. You need to communicate effectively with your participants about why and how you are collecting their feedback, and how you will use it to improve your program. You also need to share the outcomes and actions taken based on the feedback and encourage them to join further conversations or assessments. This way, you can establish trust and involvement with your participants, and make them feel part of your program’s success.

When deciding what to share with your program participants, consider the following questions:

Make sure to share the main results and next steps within a month of when participants completed the survey. This shows your program participants that you value their feedback and are dedicated to improving your services. It also helps build trust and involvement, which can lead to better results and retention. You can communicate your results in different ways, such as email, newsletter, website, social media, or webinar. Be sure to emphasize the main points, insights, and suggestions from the survey, and explain how you intend to apply them or address any issues.

Let’s talk about a truth in our culture. We often operate from the neck up. Our brains often dominate our actions. Here’s an example.

First thing in the morning, my brain is ticking through my to-do list, my body is going through the motions of getting coffee, brushing my teeth, and getting my kids moving, and my heart is completely excluded from what’s happening. And then I can’t find my keys, or my phone, or both, because I was so stuck in my head. My heart and brain were not communicating, and I was not present to what was around me.

Sound familiar?

As a program evaluator, operating only from the neck up, or just using your brain, excludes a critical part of being an effective evaluator: the heart. If you’re looking to build your capacity in program evaluation, ensure your brain and heart are both fully present and communicating.

What do I mean by the brain and heart? Well, the brain refers to the cognitive aspects of evaluation, such as logic models, survey design, and analysis techniques. The heart refers to the emotional and relational aspects of evaluation, such as building a culture of learning, trust, and empathetic listening.

The HeartMath Institute explores the role of the heart in human performance in its e-book Science of the Heart, which states, “… communication between the heart and brain actually is a dynamic, ongoing, two-way dialogue, with each organ continuously influencing the other’s function.”

Why is it important to include both the brain and heart in program evaluation? Because evaluation is not just a technical exercise. It’s also a human one. It involves working with both data and people. To build your capacity in program evaluation, you need to use your brain to analyze and interpret the data objectively. You also need to use your heart for authentic listening, building trust, and cultivating a learning culture.

So, as you build your program evaluation skills, such as creating logic models and surveys, be sure to bring your heart into the process. It matters. It will empower you to take a holistic approach to creating a realistic and meaningful program evaluation process.

For more insights, check out my plenary session from the 2020 American Evaluation Association annual conference. The theme was Shine your Light, and I touched on heart/brain communication, building a culture of evaluation, and more. Check it out here: https://youtu.be/KUuNhQPXyyw

A literature review is a great place to start your survey design. In some cases, surveys have already been created to measure whatever it is you want to measure. This can save you a lot of time and effort if you find something that already exists.

It starts with a question. Then, a deep dive into journals, articles, and other resources to review published studies, tools, and other literature on the topic.

For the Candlelighters for Children with Cancer Family Camp program, we wanted to explore what surveys already exist for similar programs. The Family Camp is a three-day camp for families experiencing pediatric cancer. Its outcomes are:

We did a search in Google Scholar and Google for the following phrases:

The camp-specific searches led to the evaluation reports from other camps that were good examples of how they addressed similar outcomes. Once some resources surfaced, the bibliographies of those articles led to more resources. Ultimately, we found a range of surveys from similar programs. These helped us develop the participant survey for their program.

Literature review questions to consider:

This may be a staff member, a university student, or a volunteer with research experience. Whoever does it, be sure they document all the resources gathered in a bibliography. This effort generally takes 10-15 hours for someone with experience, so plan accordingly.

To get your data, assign one person to oversee the implementation of your program evaluation plan. This includes allocating time for the literature review to be completed and used to inform survey design.

(This is an excerpt from Nonprofit Program Evaluation Made Simple: Get your Data. Show your Impact. Improve your Programs., chapter 13, pages 153-155.)

Want more? Check out my book, Nonprofit Program Evaluation Made Simple. There’s an entire chapter dedicated to survey design.

I’m excited to share with you that Evaluation into Action, LLC, is now an affiliate partner with Joan Garry’s Nonprofit Leadership Lab. I’ve been a big fan of Joan ever since we crossed paths a few years ago. What truly caught my attention are her 14 attributes of a thriving nonprofit. One of them is having clear and measurable outcomes. And that means you need to have program evaluation in place.

One great way to check out how valuable the Nonprofit Leadership Lab can be is to join Joan Garry for a jam-packed workshop that will give you the practical advice you need to strengthen your org and lead with more intention. Here are a few webinars you can take advantage of. Click the link to reserve your seat!

I’m so grateful for ongoing positive feedback for my book, Nonprofit Program Evaluation Made Simple: Get your Data. Show your Impact. Improve your Programs. Thanks to Jane T. for her review.

“This is my go-to book for program evaluation. Chari spells out in very clear language the process/steps of evaluation and gives great examples of how this works. It’s all about the impact of our programs and the “so what” of results. I teach program evaluation and design and recommend this book to my students, as well. Buy this book if you work in nonprofit – you won’t be sorry you did!!!!”

If you have my book, please consider writing a review and rating it wherever you purchased it. It truly helps others find it more easily.

Alignment is the key ingredient to create quality surveys.

Let’s unpack that.

Alignment is defined as the proper positioning of parts in relation to each other. In program evaluation, these parts are: (1) measurable outcomes, and (2) survey items. The alignment between these two parts is the secret sauce in knowing what to ask in your survey. First, you have to define the impact you expect your program to make, and then you can build survey items based on the defined impact.

A measurable outcome statement, also known as a change statement, provides clear direction for what you expect to change as a result of program activities. Let’s walk through an example.

Northwest Real Estate Capital Corporation (NWRECC) manages affordable housing communities. Fourteen of those communities offer a resident services program, in which a Service Coordinator connects residents to a range of services and resources, such as home management assistance, health and wellness coordination, social events, and educational opportunities. We worked collaboratively with NWRECC to develop five measurable outcome statements for their resident services program that align to the program activities.

Let’s walk through one outcome to illustrate how the alignment process works.

Building Community Outcome: Improve socialization within the community, which will lead to reduced feelings of stress and isolation.

An effective evaluation plan to measure program impact and implementation focuses on aligning all the key components, including the outcomes, activities, and measurement tools. In this case, the survey items that were developed for a resident survey align with the program components, as explained in the following example for the Building Community outcome.

Outcome to Survey Alignment

How do we measure if residents improved their socialization within the community?

In the survey, we asked what services residents participated in. One of the options was social events. This response choice helped us to understand how many residents participated in social events across the 14 properties. If the outcome is to improve socialization, we need to know who participated in socialization opportunities.

How do we know if residents improved socialization within the community, or experienced reduced feelings of stress? We ensured that survey items specifically aligned to these aspects of the Building Community outcome, as shown in the following questions. Do you see the alignment?

There were more survey items aligned to this outcome than the two included here, but this excerpt is just enough to help you understand how alignment works. Alignment between what you expect to change and the content of your surveys will ensure you are measuring what matters.


Want to learn more? Join me at 11:00 a.m. PT on Thursday, October 13 for Building a Culture of Evaluation.


During this 20-minute webinar hosted by the American Evaluation Association, we’ll explore issues around an organization’s willingness to engage in evaluation, using real-world examples and a concrete process for building a culture of evaluation. More information & registration here.

To receive a discount, use the promo code evalrocks. If you have any trouble with the discount code or registration, please contact Benjimin at bborucki@eval.org. Please note: you will have to set up an American Evaluation Association account in order to register, even as a nonmember.


I’m going to get fired.

That’s what you might think if your boss emailed you a report showing your program isn’t meeting its goals.

I’m going to get fired.

Let’s unpack that.

Let’s rewind and try this instead:

Your boss calls you into their office and says, “We got this report showing the program isn’t meeting its goals. Let’s dig into the data and see what we can learn about what’s not working. Then we can brainstorm together what we can change in the program plan to improve progress toward the goals.”

Better, right? Maybe getting fired is no longer the fear. Instead, there’s genuine curiosity about how to improve and meet program goals.

That’s how program evaluation can support your program: by helping you understand what is going well and where improvements are needed. It does require a commitment to get curious when something isn’t working. It comes down to these three concepts:

This helps to build a culture of evaluation in your organization. Cultivating this kind of culture emphasizes learning to ensure you are in fact making the difference you intend to make.


Communication. That’s the secret. If you want to increase the likelihood that you will have a higher response rate to your survey, communicate before, during and after your survey is administered. Let’s break it down into these three phases.

Before.

Do your participants sign up for your program and complete a registration form, intake form, or something else? If so, you can set the expectation there that they will be asked to complete a survey, participate in a focus group, or take part in other data collection activities because their feedback is critical to your ongoing program improvement. Include something like:

And, at least one month prior to survey administration, let them know it’s coming. This may be:

During.

It’s go time. Send out the survey and keep it open for at least 10 days. Depending on your audience, sometimes leaving it open for a month is the best course of action. While it’s out, continue to communicate the survey is open, their feedback is important, and reiterate the due date.

On the last day the survey is open, be sure to emphasize that it closes that day and ask people to complete it.

After.

How many of you have completed a survey and had no idea what happened to the results? You may all have your hands raised. I know I do. Let’s change that. It’s important to share back key findings so people know their feedback was heard and, better yet, used. It creates a feedback loop.

After you’ve analyzed the data, share key findings and how the data will be used. Something like:

“Thank you for completing the survey! We had (insert number) completed surveys and key findings are as follows (insert 4-6 key points). Based on these data, we are going to take the following actions (insert those too!).”

If you want to see what these impact summary reports look like, take a look at this page for examples.

Do you teach in a nonprofit management program or know someone who does? Please share this new web page with them, which details how to use my book to support teaching program evaluation.

This fall, I’m teaching Program Evaluation at the University of Portland as part of their Nonprofit MBA program. Visiting students are welcome, but please note that the course is in person, on Wednesday evenings from August 31 to December 14.

This course – BUS 591 Program Evaluation – covers the critical role program evaluation plays in a nonprofit and steps on how to do it. Topics include building staff buy-in, logic models, planning, measurable outcomes, survey design, program data management, data analysis/reporting, and communicating key findings.

For information on how to register as a visitor, contact Melissa McCarthy, MBA Program Director, mccarthy@up.edu. Feel free to contact me with any questions!

My book, Nonprofit Program Evaluation Made Simple: Get your Data. Show your Impact. Improve your Programs., has received favorable reviews in several academic journals. I am so grateful for this positive feedback. Many have found the book useful, and I recommend it for evaluation students, professors of nonprofit management, and anyone in your life who may be working to evaluate their program.

“This book represents a culture shift from exclusively chasing data to respond to funder requests to using data to learn…this book shows you how to integrate program evaluation into your day-to-day operations in order to improve programs and demonstrate your impact.”

Journal of Philanthropy & Marketing, February 2022

Book review by Dr. David Fetterman

Author, Empowerment Evaluation

President & CEO of Fetterman and Associates

“…the author adopts a strong cultural approach to evaluation. It is more a textbook to be used by teachers in master class and by trainers for mid-managers positions in the public and nonprofit sectors.”

VOLUNTAS: International Journal of Voluntary and Nonprofit Organizations (2022)

Book review by Andrea Bassi

Associate Professor in General Sociology

You can read the full review here. Please note, if you’re not a subscriber it is available for purchase.

“In Nonprofit Program Evaluation Made Simple, Chari Smith has produced a summary guide targeting professionals working in nonprofit organizations who are beginning their understanding of what is, and how to undertake, an evaluation. The tone of the book is bright and positive, the author clear, engaging, and professional.”

The Canadian Journal of Program Evaluation

Lisa M. P. O’Reilly, MPA CE

When you hear the term ‘logic model,’ does a nervous quiver run down your spine? Or the opposite: do you absolutely love them?

How people initially feel about the term definitely varies, but the bottom line is this: having a visual summary of what your program does and the difference it’s expected to make will benefit your organization.

Both logic models and impact models accomplish this, but in different ways. Both should be created collaboratively, to ensure all staff members are enrolled in defining the key components of the model.

What is a logic model? It is a linear, one-page document that defines a program’s goals, inputs, activities, outputs, outcomes, and impact. Logic models serve an important role as a visual summary of the program. Ideally, it’s created at the start of program design.

The go-to resource for creating a logic model is the W.K. Kellogg Foundation’s Logic Model Development Guide (2004), which defines a logic model as follows: “The program logic model is defined as a picture of how your organization does its work—the theory and assumptions underlying the program. A logic model links outcomes (both short- and long-term) with program activities/processes and the theoretical assumptions/principles of the program.”

What is an impact model? It still answers the two core questions: what does your program do, and what change is expected as a result? But it departs from the traditional linear format to include whatever information and format your organization will use. The impact model reflects your organization’s culture, right down to brand colors, making it usable in development, marketing, and communications efforts.

Both models provide direction and clarity for program evaluation, along with insight into what methodology and measurements to use and a framework for discussing program evaluation elements with staff. You be the judge of what is truly best. If you have to create a logic model to satisfy a funder, do that. If you prefer a customized visual summary, do an impact model instead. At the end of the day, you want a visual summary that depicts what your program does and what change is expected as a result. It is a cornerstone of developing a realistic and meaningful program evaluation plan. And, with the element of visualization, it literally gets everyone onto the same page.

If you want to learn more about the difference between logic and impact models, and how to use both of these in your organization’s work, register for my upcoming workshop Logic Models Made Simple… and Impact Models too! on Thursday, 5/12! Make sure to use the promo code evalmadesimple to receive a discount; note that the code is case sensitive.

(This blog post is a summary excerpt from my book Nonprofit Program Evaluation Made Simple: Get your Data. Show your Impact. Improve your Programs. Bonus: every book comes with free access to my companion website, which is full of free downloadable templates and resources you can use right away. Look for access instructions in the book’s Introduction. Get your copy now on Amazon, Barnes & Noble, or wherever books are sold!)

Program evaluation is a vital tool for any professional in the nonprofit sector, but teaching these concepts to undergraduates new to the field can be challenging.

When I heard that Dr. Shaunette Parker, a professor at Lander University, used my book Nonprofit Program Evaluation Made Simple: Get your Data. Show your Impact. Improve your Programs. as a required textbook in her undergraduate program evaluation course this year, I couldn’t wait to hear all about it!

She shared that her students used the book (and the companion website) to help them create evaluation plans and data collection tools. Dr. Parker called the book a “real life tool” and was able to draw from her extensive experience in nonprofits while teaching. “It just felt very comfortable. I could stand behind the information so it wasn’t difficult for me to share.”

Watch our full 15-minute conversation now:

Western Michigan University Presents:

Eval Café: A Discussion with Chari Smith on Fostering Evaluation Organizational Cultures

Wednesday, 3/30 from 9-10am PST


Logic Models Made Simple… and Impact Models too!

Thursday, 5/12/2022 from 9-11am PST

Space is limited; grab your spot today.

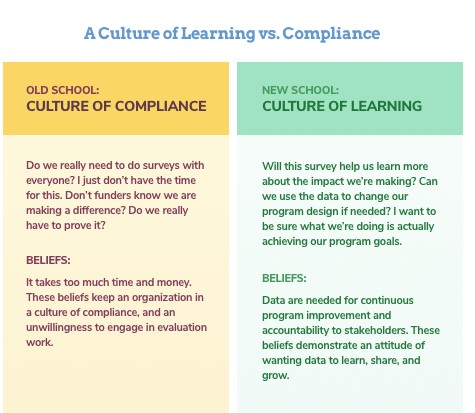

The intersection of program evaluation and organizational culture is critical to understand. Organizational culture relates to program evaluation in a very direct way: values and attitudes drive behaviors that make up the culture of your organization, and the way your staff feel and think about evaluation will ultimately dictate their behaviors and attitude towards the work of program evaluation itself.

Beth Kanter and Aliza Sherman provide this clear definition of organizational culture in their book The Happy, Healthy Nonprofit: Strategies for Impact without Burnout:

“Organizational culture is a complex tapestry made up of attitudes, values, behaviors, and artifacts of the people who work for your nonprofit.”

Think about this: are your staff doing evaluation because of a mandate from funders, or because of a genuine desire to learn from the insights the data provide and to apply those learnings for continuous program improvement?

The former is more of a culture of compliance, and the latter is more a culture of learning.

As you read through the summarized image below that presents these two different cultures, ask yourself: where does YOUR staff currently fall?

Even though every organization tends to have a wide range of attitudes toward program evaluation, from those who are enthused to those who are resistant, it’s unfortunately all too common for organizations to be stuck on the yellow side of this image: a culture of compliance.

The natural next question I’m sure you have is this: “Chari, is it possible to help my team members make the shift from a culture of compliance to a culture of learning? How?!”

I have good news for you – yes, a culture shift is possible!

Here are 3 steps to begin this shift from compliance to learning culture at your organization:

This blog post is a summary excerpt from my book Nonprofit Program Evaluation Made Simple: Get your Data. Show your Impact. Improve your Programs. Check out Chapter 4, Building a Culture of Evaluation, for more tips to help your organization learn to embrace program evaluation. Bonus: every book comes with free access to a companion website that is full of downloadable templates you can use right away – look for access instructions in the book’s Introduction. Get your copy now on Amazon or at Barnes & Noble!

Upcoming Events

Live Virtual Workshop:

Program Evaluation Made Simple

Thursday, October 21 | 9:00 a.m. – 10:15 a.m. Pacific


*** This article was originally published in September 2018, and was updated and republished in June 2021

Outcome statements are change statements. They are critical to ensure you are collecting data that will inform program improvement efforts. They address the key question:

The statements provide the foundation from which all data collection questions will stem. Too often, organizations jump into creating surveys without outcome statements. The result can be questions that have nothing to do with understanding program impact or measuring progress. It’s like throwing darts at a board and hoping something sticks.

The process of creating measurable outcomes requires time, planning, and collaboration. Outcome statements provide direction, so when you’re ready to collect data, what you ask is aligned to them. You’ll throw darts and hit a bullseye.

| Program Activity (What Does the Program Do?) | Outcome 1 (What Change Is Expected as a Result?) | Outcome 2 (What Change Is Expected as a Result?) |
|---|---|---|
| Provide math and science classroom activities for at-risk students | Students will improve their attitude toward math and science | Students will increase their interest in math and science |

At a bare minimum, development and program staff should come together to create these statements. Ideally, others are at the table too, depending on the size of your organization. Development staff members can use outcome statements in their grant proposals and other fundraising activities as applicable. Program staff typically collect the data. The result: development staff have the data they need to report to funders, and program staff have the data they need to understand program successes and challenges. Bullseye!

This brief mini-guide series goes into more detail on several evaluation subjects. The first one covers how to create logic models and measurable outcomes.

Chari accurately captured the fundamental goals and mission of our organization and transformed our input into a clear evaluation process that helps us assess the impact of our programs on the lives of the families that we serve. Now we have an amazing way to measure the physical, emotional, and mental effects of our programs and to guide change, ensuring that we are delivering services in the most effective way possible.

Brandi Tuck, Executive Director, Path Home
